Algorithm-hardware Co-design for Efficient Brain-inspired Hyperdimensional Learning on Edge

Published in 2022 Design, Automation & Test in Europe Conference & Exhibition (DATE), 2022

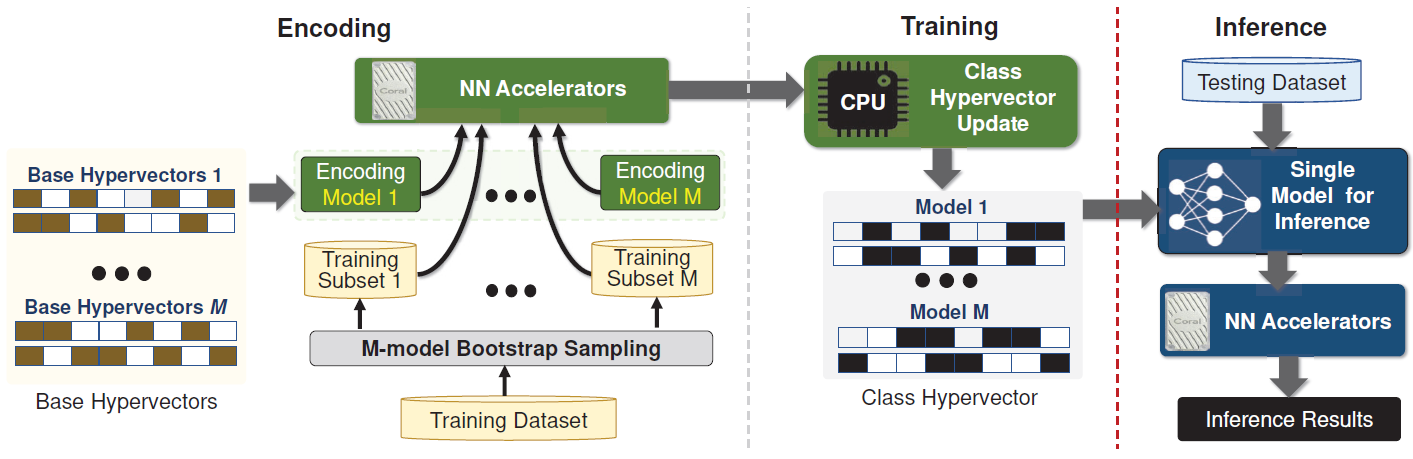

Machine learning methods are widely used to deliver high-quality results on many cognitive tasks. Running sophisticated learning tasks, however, incurs high computational cost because large amounts of training data must be processed. Brain-inspired Hyperdimensional Computing (HDC) has been introduced as an alternative for lightweight learning on edge devices. However, HDC models still rely on accelerators to ensure real-time and efficient learning. These hardware designs are not commercially available, and they need a relatively long time to synthesize and fabricate whenever a new application is derived. In this paper, we propose an efficient framework for accelerating HDC at the edge by fully utilizing the available computing power. We optimize HDC through algorithm-hardware co-design of the host CPU and existing low-power machine learning accelerators, such as the Edge TPU. We interpret the lightweight HDC learning model as a hyper-wide neural network to take advantage of the accelerator and its machine learning platform. We further reduce the runtime cost of training by employing bootstrap aggregating (bagging) while maintaining learning quality. We evaluate the performance of the proposed framework on several applications. Joint experiments on a mobile CPU and the Edge TPU show that our framework achieves 4.5× faster training and 4.2× faster inference compared to the baseline platform. In addition, our framework achieves 19.4× faster training and 8.9× faster inference compared to an embedded ARM CPU, the Raspberry Pi, which has similar power consumption.
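To make the two central ideas of the abstract concrete, the following is a minimal NumPy sketch, not the paper's implementation. It assumes a random-projection encoder with a sign nonlinearity (which plays the role of one very wide hidden layer), class hypervectors formed by summing encoded samples (the output layer), and bagging realized as several small HDC models trained on bootstrap samples whose similarity scores are summed. All function names, the hypervector dimension, the number of sub-models, and the sampling ratio are illustrative assumptions.

```python
import numpy as np

def encode(X, proj):
    # Wide "hidden layer": random projection followed by a sign activation,
    # producing bipolar (+1/-1) hypervectors.
    return np.where(X @ proj >= 0.0, 1.0, -1.0)

def train_hdc(X, y, n_classes, dim, rng):
    # Assumed encoder: a fixed Gaussian random projection (not trained).
    proj = rng.standard_normal((X.shape[1], dim))
    H = encode(X, proj)
    # "Output layer": bundle (sum) the hypervectors of each class.
    class_hvs = np.zeros((n_classes, dim))
    np.add.at(class_hvs, y, H)
    return proj, class_hvs

def scores_hdc(model, X):
    proj, class_hvs = model
    H = encode(X, proj)
    # Dot product with normalized class hypervectors; proportional to
    # cosine similarity because every encoded row has the same norm.
    norms = np.linalg.norm(class_hvs, axis=1) + 1e-12
    return (H @ class_hvs.T) / norms

def train_bagged(X, y, n_classes, n_models=4, dim=1024,
                 sample_ratio=0.6, seed=0):
    # Bagging: each sub-model sees a bootstrap sample (drawn with replacement).
    rng = np.random.default_rng(seed)
    n_sub = max(1, int(sample_ratio * len(X)))
    models = []
    for _ in range(n_models):
        idx = rng.choice(len(X), size=n_sub, replace=True)
        models.append(train_hdc(X[idx], y[idx], n_classes, dim, rng))
    return models

def predict_bagged(models, X):
    # Aggregate by summing the per-model similarity scores.
    total = sum(scores_hdc(m, X) for m in models)
    return np.argmax(total, axis=1)
```

Under these assumptions, both encoding and similarity search reduce to dense matrix multiplications, which is the kind of workload a neural-network accelerator handles well. `train_bagged(X, y, n_classes)` expects a float feature matrix `X` and integer labels `y`, and `predict_bagged(models, X)` returns one predicted label per row.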